AWS Pipelines with CodePipeline

Paul reviews an example pipeline for releasing a NodeJS application to AWS ECS, then dives into the related tooling and concepts.

In 2015 AWS launched CodePipeline: a suite of tools for automating software release cycles. CodePipeline allows you to configure and monitor the entire cycle by bringing together various AWS developer tools such as CodeCommit, CodeBuild and CodeDeploy.

It can also integrate with a number of third-party services like GitHub, Jenkins and CloudBees.

Each pipeline in CodePipeline is broken into several stages, and each stage has its own input and output artefacts. This allows you to work with common tooling that may already be integrated into your workflows and culture.

In this post, we will review an example pipeline for releasing a NodeJS application to AWS ECS and then look into the tooling and concepts. I'll also offer some of my own observations whilst migrating to this toolset.

CodePipeline NodeJS release

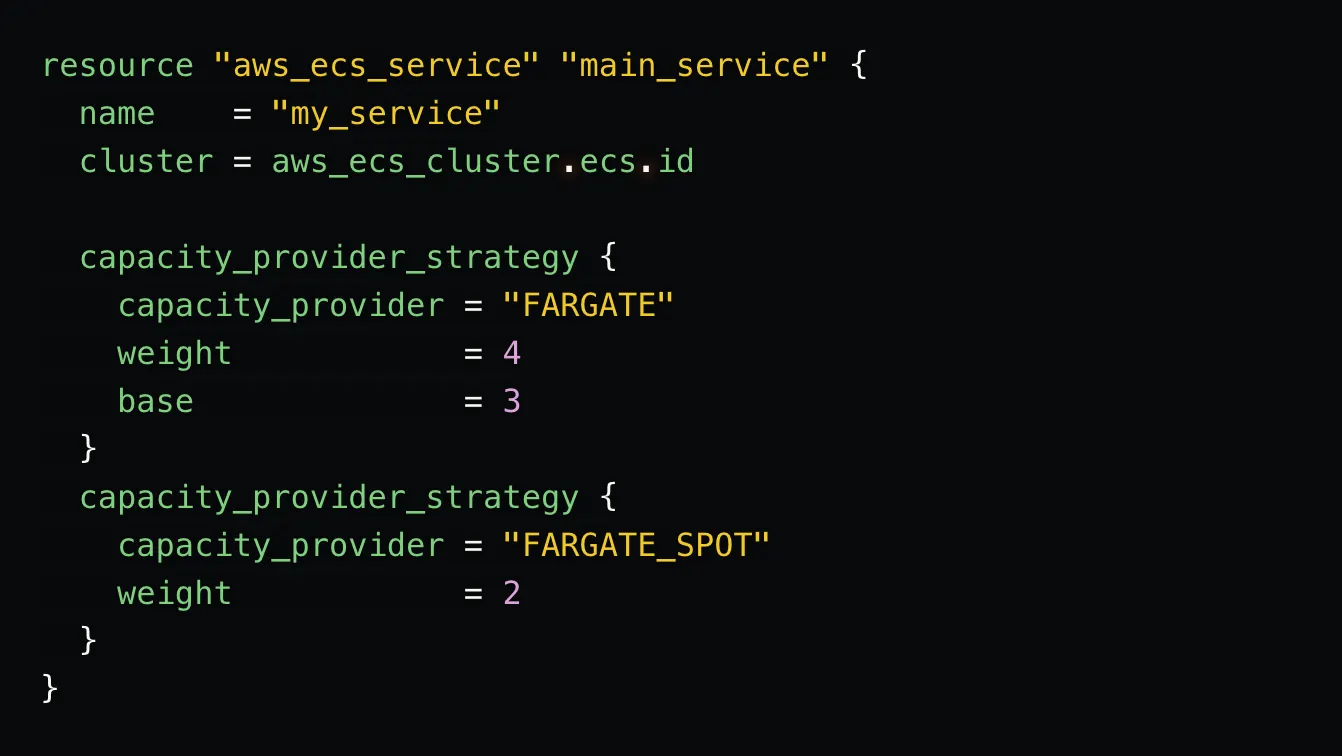

I've created an example CodePipeline using Terraform, a cloud orchestration tool for provisioning infrastructure. You can reference it here: https://github.com/steamhaus/fargate-codepipeline/

This Terraform package will create a pipeline for deploying a NodeJS app to ECS Fargate. **Be aware that after running this you will likely start to incur charges on your AWS account.**

This pipeline is designed to build a NodeJS app, package it into a container and then deploy it to Fargate. The pipeline flows like this:

- Source stage detects updates to a GitHub repo and pulls in the latest code.

- Build stage launches a container to compile dependencies and build the Docker image.

- Approval blocks the build from being deployed to live without authorisation.

- Deploy stage triggers a rolling update to the ECS Fargate service.
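The flow above can be modelled as plain data to make the artefact hand-off between stages explicit. This is a minimal Python sketch, not the Terraform from the repo: the stage names follow the list, but the artefact names `source_output` and `build_output` are hypothetical. It checks that each stage only consumes artefacts produced by an earlier stage.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    """One pipeline stage and the artefacts it consumes and produces."""
    name: str
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)


# Hypothetical artefact names; the real pipeline may name them differently.
PIPELINE = [
    Stage("Source", outputs=["source_output"]),
    Stage("Build", inputs=["source_output"], outputs=["build_output"]),
    Stage("Approval"),  # manual gate: no artefacts in or out
    Stage("Deploy", inputs=["build_output"]),
]


def unresolved_inputs(stages: list[Stage]) -> list[tuple[str, str]]:
    """Return (stage, artefact) pairs whose input no earlier stage produced."""
    produced: set[str] = set()
    missing = []
    for stage in stages:
        for artefact in stage.inputs:
            if artefact not in produced:
                missing.append((stage.name, artefact))
        produced.update(stage.outputs)
    return missing


if __name__ == "__main__":
    print(unresolved_inputs(PIPELINE))  # []
```

The approval stage carries no artefacts in this model, so the Deploy stage still consumes the build output directly.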

This is a very basic example of a release cycle that you might want to implement, but it incorporates the core services offered by the Code* family. A larger application may require a series of build steps and tests before approval, or may implement a blue/green or canary style of deployment, all of which can be achieved with CodePipeline.

CodePipeline NodeJS Build

The suggested pipeline uses AWS’s CodeBuild service as a build engine and runner. Other services like Jenkins can be integrated at this stage if more control is required or legacy systems need to be built. For most jobs, however, CodeBuild is all you need.

The most attractive feature of CodeBuild is that it doesn’t require a dedicated build server. CodeBuild is akin to a serverless container orchestration engine: all jobs execute inside a container and no underlying hardware needs to be managed. This allows CodeBuild to scale to your organisation’s needs while only charging you for the time spent building.
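A rough way to reason about paying only for build time is to total each build's duration in whole minutes and multiply by a per-minute rate. In this Python sketch both the round-up-per-build rule and the rate are assumptions for illustration; check the CodeBuild pricing page for the actual figures for your compute type and region.

```python
import math


def billed_minutes(build_durations_s: list[float]) -> int:
    """Total billable minutes, assuming each build is rounded up to a whole minute.

    Idle time between builds does not appear here because nothing is running.
    """
    return sum(math.ceil(seconds / 60) for seconds in build_durations_s)


def estimated_cost(build_durations_s: list[float], rate_per_minute: float) -> float:
    """Estimated spend; rate_per_minute is a placeholder, not a real AWS price."""
    return billed_minutes(build_durations_s) * rate_per_minute


if __name__ == "__main__":
    # Three builds of 90 s, 45 s and 300 s bill as 2 + 1 + 5 minutes.
    durations = [90, 45, 300]
    print(billed_minutes(durations))  # 8
    print(estimated_cost(durations, 0.01))  # hypothetical rate
```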

Build containers can be spawned from one of several AWS-provided Docker images or from a user-provided image. The build procedure is defined in a file called buildspec.yml, which in this example lives in the Terraform repo. Here is the buildspec for this NodeJS/Docker pipeline:

```yaml
version: 0.2

phases:
  install:
    runtime-versions:
      docker: 18
  pre_build:
    commands:
      - pip install awscli --upgrade --user
      - aws --version
      - echo Logging in to ECR...
      # get-login is an AWS CLI v1 command; CLI v2 replaces it with get-login-password.
      - $(aws ecr get-login --region eu-west-1 --no-include-email)
      # repository_url and application_name are Terraform template variables,
      # filled in when Terraform renders this file.
      - REPOSITORY_URI=${repository_url}
      - APPLICATION_NAME=${application_name}
      - echo Configured pre-build environment
  build:
    commands:
      - echo Build started on `date`
      - echo Building the image...
      - docker build -t steamhaus-lab/$APPLICATION_NAME .
      - docker tag steamhaus-lab/$APPLICATION_NAME:latest $REPOSITORY_URI:latest
  post_build:
    commands:
      - echo Build completed on `date`
      - echo Pushing the Docker images...
      - docker push $REPOSITORY_URI:latest
      - echo Writing image definitions file...
      # "name" must match the container name in the ECS task definition.
      - printf '[{"name":"%s","imageUri":"%s"}]' $APPLICATION_NAME $REPOSITORY_URI:latest > imagedefinitions.json

artifacts:
  files:
    - imagedefinitions.json
```

Build phases are composed of shell commands, and the resulting artefact files are zipped up and uploaded to S3. In this example, the Docker image is also pushed to ECR as part of the post_build phase.
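CodeBuild handles the packaging step for you, but it helps to see what the next stage actually receives. This minimal Python sketch zips the files listed under the buildspec's `artifacts.files` in memory, roughly as CodeBuild does; the S3 upload is left out because it needs credentials, and the file contents are made up.

```python
import io
import zipfile


def package_artefacts(files: dict[str, bytes]) -> bytes:
    """Zip artefact files in memory, roughly as CodeBuild does before the S3 upload.

    `files` maps each path listed in artifacts.files to its contents.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path, content in files.items():
            archive.writestr(path, content)
    return buffer.getvalue()


if __name__ == "__main__":
    bundle = package_artefacts(
        {"imagedefinitions.json": b'[{"name":"app","imageUri":"repo:latest"}]'}
    )
    # Reopen the archive to show what the deploy stage would unpack.
    with zipfile.ZipFile(io.BytesIO(bundle)) as archive:
        print(archive.namelist())  # ['imagedefinitions.json']
```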

Take special note of the imagedefinitions.json artefact, which will be passed as an input to CodeDeploy to tell it which image URI each named container in the service should run.
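The printf in the post_build phase builds this JSON by hand, where a single stray quote produces an invalid file. As a sketch, the same body can be generated with Python's `json` module; compact separators reproduce the printf output exactly. The container name and repository URI in the example are hypothetical, and the name must match the container in the ECS task definition.

```python
import json


def image_definitions(container_name: str, image_uri: str) -> str:
    """Return the imagedefinitions.json body for a single container."""
    return json.dumps(
        [{"name": container_name, "imageUri": image_uri}],
        separators=(",", ":"),  # compact, same shape as the printf output
    )


if __name__ == "__main__":
    # Hypothetical container name and ECR URI, for illustration only.
    print(image_definitions(
        "my-node-app",
        "123456789012.dkr.ecr.eu-west-1.amazonaws.com/my-node-app:latest",
    ))
```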

CodePipeline NodeJS Deployment

The image produced by this pipeline is deployed to ECS Fargate via AWS CodeDeploy. CodeDeploy can perform deployments both to AWS services (ECS, EC2, Lambda) and to on-premises servers using the CodeDeploy agent. CodeDeploy also offers a blue/green deployment strategy for seamless releases that don’t interrupt service, and canary deployments for limited user testing.
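To make the difference between gradual traffic-shifting strategies concrete, here is a toy Python model of how much traffic sits on the new version over time under a canary shift and a linear shift. The percentages and intervals are illustrative parameters, not CodeDeploy's own configuration names, and health checks and rollback are not modelled.

```python
def canary_schedule(first_percent: int, wait_minutes: int) -> list[tuple[int, int]]:
    """(minute, % of traffic on the new version) for a two-step canary shift."""
    if not 0 < first_percent < 100:
        raise ValueError("canary percentage must be between 0 and 100")
    return [(0, first_percent), (wait_minutes, 100)]


def linear_schedule(step_percent: int, every_minutes: int) -> list[tuple[int, int]]:
    """(minute, % of traffic on the new version) when traffic moves in equal steps."""
    if step_percent <= 0:
        raise ValueError("step must be positive")
    schedule = []
    percent, minute = 0, 0
    while percent < 100:
        percent = min(100, percent + step_percent)
        schedule.append((minute, percent))
        minute += every_minutes
    return schedule


if __name__ == "__main__":
    print(canary_schedule(10, 5))  # [(0, 10), (5, 100)]
    print(linear_schedule(25, 3))  # [(0, 25), (3, 50), (6, 75), (9, 100)]
```

A canary exposes a small slice of users for a fixed soak period before the full switch, while a linear shift spreads the risk evenly across several steps.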

Like CodeBuild, CodeDeploy procedures and configuration can be defined in a file, appspec.yml, kept in the application repo or in Terraform. This is useful if certain steps and configurations are required during the deployment process: the file lets you configure where on the filesystem the artefact should be deployed, as well as hooks for scripts to be triggered at various lifecycle events.
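For EC2 or on-premises targets, the order in which hook scripts fire is set by the deployment lifecycle, not by the order you write them in appspec.yml. This Python sketch models an in-place deployment without a load balancer; the script paths are hypothetical, and the load-balancer traffic events are deliberately left out.

```python
# Lifecycle order for an EC2/on-premises in-place deployment without a load
# balancer. DownloadBundle and Install are run by the CodeDeploy agent itself,
# so appspec.yml hooks cannot target them. (ApplicationStop actually runs the
# script from the previously deployed revision.)
LIFECYCLE = [
    "ApplicationStop",
    "DownloadBundle",
    "BeforeInstall",
    "Install",
    "AfterInstall",
    "ApplicationStart",
    "ValidateService",
]
AGENT_ONLY = {"DownloadBundle", "Install"}


def hook_run_order(hooks: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Order (event, script) pairs the way the lifecycle would run them."""
    for event in hooks:
        if event not in LIFECYCLE or event in AGENT_ONLY:
            raise ValueError(f"{event} is not a scriptable lifecycle event here")
    return [(event, script) for event in LIFECYCLE for script in hooks.get(event, [])]


if __name__ == "__main__":
    # Hypothetical scripts standing in for an appspec.yml "hooks" section.
    order = hook_run_order({
        "ValidateService": ["scripts/smoke_test.sh"],
        "BeforeInstall": ["scripts/stop_old.sh"],
        "AfterInstall": ["scripts/npm_install.sh"],
    })
    for event, script in order:
        print(event, script)
```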

In our example, however, using ECS Fargate and rolling deployments, the imagedefinitions.json from the build output is enough, as we are simply replacing the running container.
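Conceptually, a rolling deploy driven by imagedefinitions.json only swaps image URIs: take the service's current task definition, replace the image of each container named in the file, and register the result as a new revision for the service to roll out. The Python sketch below shows just that matching step on plain dicts loosely shaped like ECS `containerDefinitions`. It does not call the ECS API, and the container names are hypothetical.

```python
import copy
import json


def apply_image_definitions(container_defs: list[dict], imagedefs_json: str) -> list[dict]:
    """Return a new list of container definitions with images swapped in.

    container_defs is a simplified stand-in for a task definition's
    containerDefinitions; imagedefs_json is the imagedefinitions.json body.
    """
    updates = {entry["name"]: entry["imageUri"] for entry in json.loads(imagedefs_json)}
    unknown = set(updates) - {c["name"] for c in container_defs}
    if unknown:
        # In this sketch, a name that matches no container is treated as an error.
        raise ValueError(f"no container named {sorted(unknown)} in the task definition")
    new_defs = copy.deepcopy(container_defs)  # leave the current revision untouched
    for container in new_defs:
        if container["name"] in updates:
            container["image"] = updates[container["name"]]
    return new_defs


if __name__ == "__main__":
    # Hypothetical current revision with a single container called "my-node-app".
    current = [{"name": "my-node-app", "image": "my-node-app:previous", "essential": True}]
    imagedefs = '[{"name":"my-node-app","imageUri":"my-node-app:latest"}]'
    print(apply_image_definitions(current, imagedefs))
```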

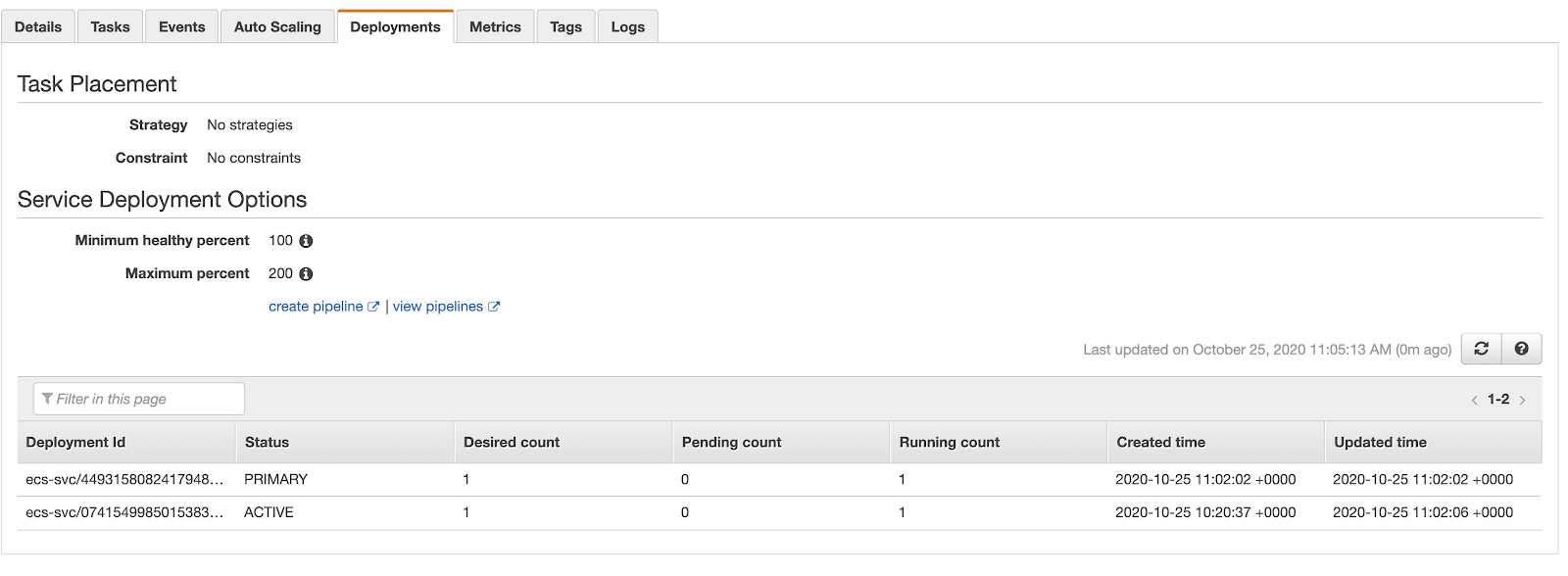

When CodeDeploy is triggered and the imagedefinitions.json artefact is passed over, an ECS service deployment is triggered. You can watch the process in the “deployments” tab of the ECS service that is being modified.

The Deploy stage will display a success or failure message depending on the outcome. Because the rolling update follows a create-before-destroy lifecycle, a failed deployment will not cause an outage: traffic continues to flow to the previous container, which is only drained once the new image passes its health checks.
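The create-before-destroy guarantee can be expressed as a simple invariant: at no point is the set of tasks receiving traffic empty. This toy Python model records that set at each step. It is an illustration only, and it ignores ECS's minimum/maximum healthy percent settings and the task-by-task replacement a real rolling update performs.

```python
from typing import Callable


def rolling_update(running: list[str], new_image: str,
                   is_healthy: Callable[[str], bool]) -> list[list[str]]:
    """Toy create-before-destroy update.

    Returns the tasks receiving traffic at each step, so we can check that
    traffic always has somewhere to go.
    """
    serving = [list(running)]
    replacements = [new_image] * len(running)  # create before destroy
    if all(is_healthy(task) for task in replacements):
        serving.append(list(running) + replacements)  # both sets registered
        serving.append(replacements)                  # old tasks drained
    # If any replacement fails its health check, it never receives traffic
    # and the old tasks keep serving.
    return serving


if __name__ == "__main__":
    old = ["app:v1", "app:v1"]
    ok = rolling_update(old, "app:v2", lambda task: True)
    failed = rolling_update(old, "app:v3", lambda task: False)
    print(ok[-1])  # ['app:v2', 'app:v2']
    print(failed[-1])  # ['app:v1', 'app:v1']
    print(all(step for step in ok + failed))  # True
```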

CodePipeline Pros

AWS Ecosystem

A deployment system that has been built from the ground up to support AWS services offers a number of pain-free benefits:

- Multiple deployment options with native support for all AWS compute services.

- Using Terraform/CloudFormation to orchestrate entire pipelines as code.

- Securing pipeline services by locking down their IAM roles.

- Granting least privilege access to users.

- Monitoring, logging, and alert capabilities through CloudTrail and CloudWatch.

- Billing handled per-minute and explorable via Billing and Cost Management.
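Least-privilege access in practice means scoping each pipeline role to the resources it touches. As an illustration, this Python sketch builds an IAM policy document that lets a build role push images to a single ECR repository. The repository ARN is hypothetical, and a real CodeBuild role would also need CloudWatch Logs and artefact bucket permissions, which are omitted here.

```python
import json


def ecr_push_policy(repository_arn: str) -> dict:
    """Minimal IAM policy letting a build role push images to one ECR repository."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # The registry login token cannot be scoped to a single repository.
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {
                # Layer upload and image push, limited to the one repository.
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:InitiateLayerUpload",
                    "ecr:UploadLayerPart",
                    "ecr:CompleteLayerUpload",
                    "ecr:PutImage",
                ],
                "Resource": repository_arn,
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical account ID and repository name.
    arn = "arn:aws:ecr:eu-west-1:123456789012:repository/my-node-app"
    print(json.dumps(ecr_push_policy(arn), indent=2))
```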

Integrations

CodePipeline comfortably balances configurability with a fully managed service.

Rather than relying on third-party plugins and modules to interact with cloud services, CodePipeline works natively with them and is supported directly by AWS.

CodePipeline also has the flexibility to break out to Jenkins or Travis CI if there are specific requirements, or if you need a transitional period to migrate gracefully to a modern CI/CD system.

Visualisations

One of my favourite parts of CodePipeline is its visualisations.

Being able to observe pipelines is vital to understanding and improving the delivery process.

CodePipeline enables you to split each build and test routine into several “stages” and pinpoint where in the process delivery failed, without having to dig through an entire build log.
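The value of stage-level visibility is that a failure is localised before you open any log. This small Python sketch captures that idea: scan stage results in pipeline order and report the first one that failed. The status strings, including `NotRun`, are hypothetical labels rather than values read from the CodePipeline API.

```python
from typing import Optional


def first_failed_stage(results: list[tuple[str, str]]) -> Optional[str]:
    """Return the first stage whose status is 'Failed', scanning in pipeline order."""
    for stage, status in results:
        if status == "Failed":
            return stage
    return None


if __name__ == "__main__":
    # Hypothetical run: the build failed, so later stages never started.
    run = [
        ("Source", "Succeeded"),
        ("Build", "Failed"),
        ("Approval", "NotRun"),
        ("Deploy", "NotRun"),
    ]
    print(first_failed_stage(run))  # Build
```

Only the failed stage's log then needs reading, rather than one long log for the whole release.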

CodePipeline Pain Points

The Learning Curve

If you are new to the AWS ecosystem, there will be a lot of unfamiliar terminology, and learning about the compute side of AWS alongside the delivery side could be quite daunting.

There are also some very specific use-cases for some of the delivery methods that are not fully documented, and there are expectations around naming conventions for things such as build steps and output artefacts. For example, imagedefinitions.json/imagedefs.json is a specific file name that CodeDeploy looks for when executing.

Pipeline Organisation

In my opinion, CodePipeline has some maturing to do before it can be considered enterprise-ready. The main omission for me is the lack of organisation and hierarchy that can be associated with pipelines.

In Jenkins and Travis, for example, you can configure pipelines as subtasks and have them cascade, whereas CodePipeline will only ever present you with a flat list of jobs. You can work around this by setting up source triggers on the outputs of other jobs, but this can quickly become cumbersome to manage.
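The trigger-chaining workaround effectively turns a flat list of pipelines into a dependency graph that you have to keep track of yourself. A small Python sketch makes that graph explicit. The pipeline names and trigger relationships are hypothetical; `downstream` finds everything a single change would set off, and `cascade_order` uses the standard library's `graphlib` (Python 3.9+) to find one valid run order and reject loops.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipelines, each mapped to the pipelines whose output triggers it.
TRIGGERS = {
    "shared-lib": set(),
    "api": {"shared-lib"},
    "worker": {"shared-lib"},
    "integration-tests": {"api", "worker"},
}


def downstream(start: str, triggers: dict[str, set[str]]) -> set[str]:
    """Every pipeline that a change to `start` would eventually trigger."""
    reached: set[str] = set()
    frontier = [start]
    while frontier:
        current = frontier.pop()
        for pipeline, sources in triggers.items():
            if current in sources and pipeline not in reached:
                reached.add(pipeline)
                frontier.append(pipeline)
    return reached


def cascade_order(triggers: dict[str, set[str]]) -> list[str]:
    """One valid order to run the pipelines in; rejects trigger loops."""
    try:
        return list(TopologicalSorter(triggers).static_order())
    except CycleError as err:
        raise ValueError("pipeline triggers form a loop") from err


if __name__ == "__main__":
    print(sorted(downstream("shared-lib", TRIGGERS)))  # ['api', 'integration-tests', 'worker']
    print(cascade_order(TRIGGERS))
```

Even at four pipelines, the cascade only exists implicitly in each pipeline's source configuration, which is why it gets harder to reason about as the list grows.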

CodePipeline Summary

If your company is in a position to adopt CodePipeline, I am sure you stand to gain many benefits and save a few headaches: billing efficiency, direct service integration and reduced management overhead.

However, depending on the complexity of your current build process and delivery mechanisms it may take a substantial amount of work to fully replace. Fortunately, this is where integrations with traditional runners pay off, in allowing you to phase your migration.

If organisational requirements are not a key part of your setup and you are already on your way to a cloud-native platform, I believe adopting CodePipeline is a very worthwhile endeavour.
